Projects

Defocus estimation in natural images

Vision begins with a lens system that focuses light near the retinal photoreceptors. A lens can focus light perfectly only from one distance. Natural scenes have objects at many distances, so regions of nearly all images will be at least a little bit out of focus; that is, defocus blur is present in almost every natural image. Defocus is used for many biological tasks: guiding predatory behavior, estimating depth and scale, controlling accommodation (biological auto-focusing), and regulating eye growth. However, before defocus can be used to perform these tasks, defocus itself must first be estimated.

Surprisingly little is known about how biological vision systems estimate defocus. The computations that make optimal use of available defocus information are not well understood, little is known about the psychophysics of human defocus estimation, and almost nothing is known about its neurophysiological basis. Very little existing work has examined defocus estimation with natural stimuli. Given that defocus may be the most widely available depth cue on the planet, we decided to dig into the problem.

We have developed a model of optimal defocus estimation that integrates the statistical structure of natural scenes with the properties of the vision system. We make empirical measurements of natural scenes, account for the optics, sensors, and noise of the vision system, and use Bayesian statistical analyses to obtain estimates of how far out of focus the lens is. From a small blurry patch of an individual image, we can tell how far out of focus that patch is. Our results have implications for vision science, neuroscience, comparative psychology, computer science, and machine vision.

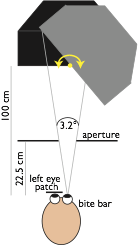

We are currently investigating human defocus discrimination in natural images using psychophysical methods and a custom-built three-monitor rig. We are also developing improved algorithms for auto-focusing digital cameras and other digital imaging systems, without the need for specialized hardware or trial-and-error search.

Natural scene statistics & depth perception

Visuo-motor adaptation

Visuo-haptic calibration

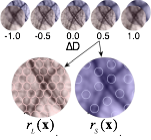

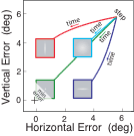

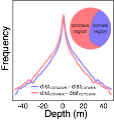

The human visual system has internalized a counter-intuitive statistical relationship between the shapes of object silhouettes and depth magnitude. In natural scenes, large depth discontinuities are more likely across edges bounding objects with convex than concave silhouettes (e.g. dinner plate vs crescent moon). In controlled experiments, we presented depth steps defined by disparity that were identical in every respect except the shape of the bounding edge. Human judgments of depth magnitude were biased in a manner predicted by the natural scene statistics.

Rapid reaching to a target is generally accurate but also contains random and systematic error. Random errors result from noise in visual measurement, motor planning, and reach execution. Systematic errors result from systematic changes in the mapping between the visual estimate of target location and the motor command necessary to reach the target. These systematic changes may be due to muscular fatigue or a new pair of spectacles. Humans maintain accurate reaching by recalibrating the visuomotor system, but no widely accepted computational model of the process exists. Given certain boundary conditions, the statistically optimal solution is a Kalman filter. We compared human to Kalman filter behavior. Their behaviors were similar: more variation in systematic error increased recalibration rate; more measurement uncertainty decreased recalibration rate; spatially asymmetric measurement uncertainty even caused different recalibration rates in different directions. We conclude that the statistical variability of the systematic error, relative to the uncertainty of the error signals, predicts the rate at which human observers recalibrate their visuo-motor system.
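To illustrate why a Kalman filter shows these trends, here is a minimal sketch of a scalar filter. It assumes the systematic error drifts as a random walk from trial to trial and is observed through a noisy error signal. The names `q` (drift variance) and `r` (measurement variance) and the numbers used are illustrative assumptions, not values from the study, and the steady-state gain stands in for the recalibration rate.

```python
def steady_state_gain(q, r, n_iter=1000):
    """Iterate a scalar Kalman recursion until the gain settles.

    q: assumed variance of trial-to-trial drift in the systematic error
    r: assumed variance of the noise on the measured error signal
    """
    p = 1.0  # initial estimate variance (the steady state does not depend on it)
    k = 0.0
    for _ in range(n_iter):
        p_pred = p + q             # predict: drift adds uncertainty
        k = p_pred / (p_pred + r)  # gain: fraction of the observed error corrected
        p = (1.0 - k) * p_pred     # update: measurement reduces uncertainty
    return k


baseline = steady_state_gain(q=1.0, r=4.0)
more_drift = steady_state_gain(q=4.0, r=4.0)   # more variation in systematic error
more_noise = steady_state_gain(q=1.0, r=16.0)  # more measurement uncertainty

print(f"baseline gain:   {baseline:.3f}")
print(f"more drift gain: {more_drift:.3f}")
print(f"more noise gain: {more_noise:.3f}")
```

In this sketch the gain rises when the drift variance grows and falls when the measurement variance grows, which is the qualitative pattern described above.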

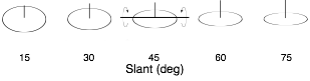

We are also preparing a manuscript on slant perception that follows similar logic. First, we measured the distribution of slants in natural scenes. Next, we collected human psychophysical data on a custom psychophysical rig. Then we compared the pattern of biases in human slant perception to the pattern predicted by an ideal observer that had internalized the natural scene statistics. We found that humans behave as if they have internalized a close approximation to the naturally occurring distribution of slants.

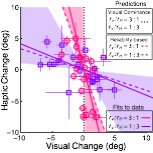

When visual and haptic cues are miscalibrated (signals disagree on average), the perceptual system re-calibrates so that the two signals more closely agree. We ran a carefully controlled study that measured the reliability (i.e. inverse variance) of visual and touch cues to slant. Then, under the condition in which the cue reliability was matched, we placed the cues in constant conflict (e.g. touch always indicated a slant 5° greater than vision). After extensive adaptation, the calibration of the cues was remeasured. We found that the visuo-haptic system adapted so that the internal estimates of visual and haptic frontoparallel more closely matched under the conflicting conditions.

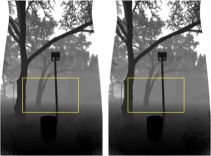

Disparity estimation in natural stereo-images

The left and right eyes have different vantage points on the world. Binocular disparities are the differences between the left- and right-eye images due to these different vantage points. In part because of the recent stereo-3D movie craze, disparity is the depth cue best known to the general public. It is by far the most precise depth cue available to humans. Large psychophysical and neurophysiological literatures exist on disparity processing.

Disparity is used to fixate the eyes and to estimate depth. However, before binocular disparity can be used in service of these tasks, the disparities themselves must first be estimated. To do that, it must be determined which part of one eye's image corresponds to which part of the other's. This problem is known as the correspondence problem. Once correspondence is established, it is straightforward to determine the disparity, i.e. subtract the position of a corresponding point in the right eye from its analog in the left. Establishing correspondence, however, is a difficult problem and one that has not been attacked systematically in natural images.

There have been many attempts at solutions. Initial attempts were 'feature-based'. They picked out features readily identified in both images (e.g. a strawberry) and went from there. These theories, however, were hard to make rigorous in the absence of a homunculus. These days, local cross-correlation (which provides a measure of local image similarity) is considered the most successful computational model of the correspondence problem. It has been successfully used to predict multiple aspects of human performance. In neuroscience, the disparity energy model is widely accepted as an account of disparity sensitive mechanisms in visual cortex. However, the rules that optimally link the responses of binocular neurons to estimates of disparity remain unknown.
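To make the local cross-correlation idea concrete, here is a minimal one-dimensional sketch. It is not the optimal model described on this page. It assumes integer-pixel shifts, a noise-free synthetic "scene", and the sign convention above (disparity = left position minus right position). The function names and parameters are illustrative.

```python
import numpy as np


def normalized_correlation(a, b):
    """Correlation coefficient between two equal-length patches."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def estimate_disparity(left, right, center, half_width, max_disp):
    """Return the integer disparity whose right-eye patch best matches the left-eye patch."""
    patch_l = left[center - half_width : center + half_width + 1]
    best_d, best_score = 0, -np.inf
    for d in range(-max_disp, max_disp + 1):
        c = center - d  # disparity = x_left - x_right, so x_right = x_left - d
        patch_r = right[c - half_width : c + half_width + 1]
        score = normalized_correlation(patch_l, patch_r)
        if score > best_score:
            best_d, best_score = d, score
    return best_d


# Synthetic test signal: the right-eye image is the left-eye image shifted by 3 pixels.
rng = np.random.default_rng(0)
scene = rng.standard_normal(200)
true_disp = 3
left = scene
right = np.roll(scene, -true_disp)  # a feature at x in the left image sits at x - 3 in the right

est = estimate_disparity(left, right, center=100, half_width=10, max_disp=8)
print("estimated disparity:", est)
```

With natural images the match is never perfect, because the two eyes' patches differ in more than a shift. That is part of what makes the correspondence problem hard.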

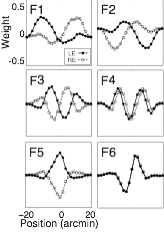

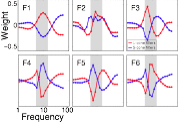

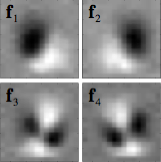

We developed a framework for (i) determining the optimal receptive fields for encoding image information relevant for processing disparity and (ii) processing the encoded information optimally to obtain maximally accurate, precise estimates of disparity in natural images. The optimal filters bear strong similarities to receptive fields in early visual cortex; the optimal processing rules can be implemented with well-established cortical mechanisms. Additionally, this ideal observer model predicts the dominant properties of human performance in disparity discrimination tasks.

This work provides a concrete example of how neurons exhibiting selective, invariant tuning to stimulus properties not trivially available in the retinal images (e.g. disparity) can emerge in neural systems. Cortical areas having such neurons have also been fundamentally important for systems neuroscience. The capacity for constancy is the ability to represent certain behaviorally relevant variables as constant given great variation in the retinal images. Such constancies are one of the great successes of sensory-perceptual systems.

Visual adaptation

The influence of a visual cue on depth percepts can change after extensive tactile training in an unnatural environment. In natural scenes, the convex side of an edge is more likely to be the near side. Similarly, the concave side of an edge is more likely to be farther away. These facts are true because most objects are bounded and closed (i.e. objects are on average convex). The visual system has internalized this statistical regularity and uses it to estimate depth. For example, for an identical disparity-defined depth step, more depth is perceived if the near surface has a convex silhouette than if it is concave. However, after extensive training in a virtual environment (~60 minutes) in which concave surfaces are always nearer, the effect of convexity on depth percepts is nearly reversed.

Estimating surface orientation from local cues in natural images

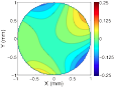

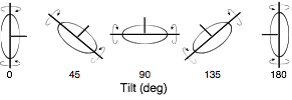

Estimating the 3D shape of objects is a critical task for sighted organisms. Accurate estimation of local surface orientation is an important first step in doing so. Surface orientation must be described by two parameters; in vision research, we parameterize it with slant and tilt. Slant is the amount by which a surface is rotated out of the reference (e.g. frontoparallel) plane. Tilt is the direction in which the surface is receding most rapidly. For example, the ground plane straight ahead is a frequently encountered surface having a tilt of 90 deg. (Note: tilt is given by the projection of the surface normal into the reference plane, see below).
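The slant/tilt parameterization can be sketched in a few lines. The coordinate convention here is an assumption made for illustration: x points right, y points up, z points toward the viewer, and the surface normal faces the viewer. The function names are hypothetical.

```python
import numpy as np


def normal_from_slant_tilt(slant_deg, tilt_deg):
    """Unit surface normal for a given slant and tilt (assumed axes: x right, y up, z toward viewer)."""
    s, t = np.radians(slant_deg), np.radians(tilt_deg)
    return np.array([np.sin(s) * np.cos(t), np.sin(s) * np.sin(t), np.cos(s)])


def slant_tilt_from_normal(normal):
    """Recover slant and tilt (in degrees) from a surface normal."""
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    if n[2] < 0:  # flip normals that face away from the viewer
        n = -n
    # Slant: angle between the normal and the line of sight.
    slant = np.degrees(np.arccos(np.clip(n[2], -1.0, 1.0)))
    # Tilt: direction of the normal's projection into the frontoparallel plane.
    tilt = np.degrees(np.arctan2(n[1], n[0])) % 360.0
    return slant, tilt


# A ground plane straight ahead: its normal points up and toward the viewer.
ground_normal = normal_from_slant_tilt(60.0, 90.0)
slant, tilt = slant_tilt_from_normal(ground_normal)
print(f"slant = {slant:.1f} deg, tilt = {tilt:.1f} deg")
```

Tilt is undefined for a frontoparallel surface (slant of zero), where the projected normal has zero length. This sketch returns 0 in that case.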

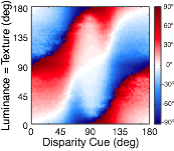

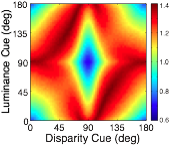

Many image cues provide information about surface orientation, but most are quite poor in natural scenes. The visual system nevertheless manages to combine these cues to obtain accurate estimates of shape. Thus, it is critical to understand how multiple cues should be combined for optimal shape estimation. In this work, we examine how gradients of various image cues (disparity, luminance, and texture) should be combined to estimate surface orientation in natural scenes. We start with tilt.

A rich set of results emerges. First, the prior probability distribution over surface tilts in natural scenes exhibits a strong cardinal bias. Second, the likelihood distributions for disparity, luminance, and texture are each somewhat biased estimators of surface tilt. Third, the optimal estimates of surface tilt are more biased than the likelihoods, indicating a strong influence of the prior. Fourth, when all three image cues agree, the optimal estimates become nearly unbiased. Fifth, when the luminance and texture cues agree they often veto disparity in the estimate of surface tilt, but when they disagree, they have little effect. We are now using these results to design and run experiments to test whether humans estimate tilt in accordance with the minimum-mean-squared-error estimator.
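As a toy illustration of how a cardinally biased prior pulls an estimate, the sketch below combines a hypothetical prior with a hypothetical single-cue likelihood on a one-degree grid and reports the circular mean of the posterior. The prior shape, the von Mises likelihood, and the concentration values are all assumptions made for this example. They are not the measured distributions, and the posterior circular mean is used here only as a simple stand-in for a minimum-mean-squared-error estimate of a circular variable.

```python
import numpy as np

tilts_deg = np.arange(0.0, 360.0, 1.0)
theta = np.radians(tilts_deg)

# Hypothetical prior with peaks at the cardinal tilts (0, 90, 180, 270 deg).
prior = np.exp(1.0 * np.cos(4.0 * theta))
prior /= prior.sum()


def von_mises_likelihood(measured_deg, kappa):
    """Assumed likelihood: peaked at the measured tilt, with concentration kappa."""
    return np.exp(kappa * np.cos(theta - np.radians(measured_deg)))


def posterior_mean_tilt(likelihood):
    """Circular mean (deg) of the posterior, proportional to prior times likelihood."""
    post = prior * likelihood
    post /= post.sum()
    mean_vector = np.sum(post * np.exp(1j * theta))
    return float(np.degrees(np.angle(mean_vector)) % 360.0)


measured = 70.0
estimate = posterior_mean_tilt(von_mises_likelihood(measured, kappa=2.0))
print(f"cue indicates {measured:.0f} deg, posterior mean is {estimate:.1f} deg")
```

In this toy setup the estimate lands between the tilt the cue indicates and the nearest cardinal tilt. This is the kind of prior-driven bias described in the third result above.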

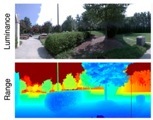

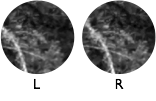

Database of stereo-images of natural scenes with co-registered range data

Much of our work on estimating and interpreting depth cues relies on knowing what the right answer (or ground truth) is. For example, to evaluate the accuracy of a depth-from-disparity algorithm, it is necessary to know with certainty what the true disparities are. To obtain such a database, we constructed a Frankensteinian device. The device consisted of a digital SLR camera mounted atop a laser range scanner, all mounted atop a custom robotic gantry: the vision science equivalent of a turducken (i.e. a chicken stuffed in a duck stuffed in a turkey). We used the robotic gantry to capture stereo-image-scans of the scene from 6.5 cm apart, a typical distance between the human eyes. We also used the gantry to align the nodal points of the camera and range scanner for each eye's view. This technique prevents half occlusions, regions of the scene that are imaged by the camera but not the scanner and vice versa. The result is a database made up of low-noise (200 ISO), high-resolution (1920x1080; 91 pix/deg), color-calibrated images with accurately co-registered range data at each pixel (error = +/- one pixel). We hope the database will serve as a useful resource for the research community.

Speed estimation in naturalistic image movies

The images of objects in the world drift across the retina at different speeds depending on how far away the objects are from the viewer. For example, when looking at the mountains out the window of a car traveling down the highway, the image of the guard rail is drifting across your retina very quickly, the image of cows in the pasture is drifting more slowly, and the image of the mountains in the distance is hardly drifting at all. Motion parallax is the term used to refer to these differential speeds. It is an important and ubiquitous cue to depth.
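The highway example can be put into rough numbers. For a stationary point directly to the side of an observer moving in a straight line, the angular speed is approximately the observer's speed divided by the point's distance. The car speed and the three distances below are assumed values chosen only for illustration.

```python
import math


def angular_speed_deg_per_s(observer_speed_m_s, distance_m):
    """Angular speed of a stationary point directly abeam of a translating observer."""
    return math.degrees(observer_speed_m_s / distance_m)


car_speed = 30.0  # m/s, roughly highway speed (assumed)
distances_m = {"guard rail": 3.0, "cows": 100.0, "mountains": 10000.0}  # assumed

speeds = {name: angular_speed_deg_per_s(car_speed, d) for name, d in distances_m.items()}
for name, speed in speeds.items():
    print(f"{name:>10}: {speed:8.2f} deg/s")
```

Under these assumptions, the angular speeds of the guard rail and the mountains differ by more than three orders of magnitude.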

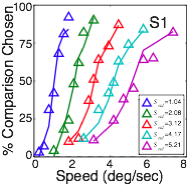

Before depth can be computed from motion parallax, the speed of motion at each retinal location must be determined. To obtain maximally accurate estimates of the speed at each retinal location, we developed an ideal observer model that incorporates the statistics of natural stimuli and the constraints imposed by the front-end of the visual system. The estimates are unbiased, and their precision decreases as speed increases.

We pitted ideal and human observers against each other in matched speed discrimination tasks. Both types of observers were shown the same large, random set of natural image movies. Human performance closely tracked ideal performance, although ideal performance was somewhat more precise. However, a single free parameter, which accounts for human computational inefficiencies (e.g. internal noise), provides a close quantitative account of the human data. This result shows that a task-specific analysis of natural signals can quantitatively predict (to a scale factor) the detailed features of human speed discrimination with natural stimuli.

The classic approach to modeling perceptual performance is a two-step process. First, one collects a set of behavioral data by presenting the same (or a small number of) stimuli many hundreds of times. Second, one develops a model that fits the data. This approach has been useful because it provides compact descriptions of large, complicated datasets. However, this approach does not provide a gold standard against which human performance can be benchmarked.

By measuring the task-relevant properties of natural stimuli and the constraints imposed by the visual system's front-end, the great majority of human behavioral variability can be explained from first principles. Additionally, in mid-level visual tasks, the human visual system can be shown to approach a theoretical limit imposed by stimulus uncertainty and the constraints of the early visual system. Our hope is that this approach will lead to the development of lawful theories of both human sensory-perceptual performance with natural stimuli, and of the underlying neurophysiological mechanisms.

Task-specific statistical methods for dimensionality reduction

In real estate, consider the task of determining whether a particular house is likely to increase in price. The likelihood that a house will increase in price may depend on its location, its size, its design, its materials, its condition, etc. But exactly how future price depends on the attributes listed above is not known. Some factors may be very predictive and some may have no predictive value. Furthermore, particular combinations of several factors may have more predictive power than any one individual factor. How does one determine the most useful feature combinations for estimating future house prices?

A similar issue exists in vision. Different stimulus features are useful for different sensory-perceptual tasks. For example, the relative activation of different color channels is useful for estimating hue and saturation, but is unlikely to be useful for estimating the speed of image motion. In the past, researchers chose stimulus features to study in the context of specific tasks because of intuition and historical precedent. We are developing and improving statistical methods for finding optimal stimulus features automatically. This is an exciting, blossoming, and foundational topic that has the potential to open up the study of natural and naturalistic stimuli in research on sensation and perception.